These speeds were reported by Windows Explorer's file copy in a Windows 10 VM running on qemu 4.0. When writing to a WD Black 7200 rpm hard disk, I get about 30 MB/sec with the “writethrough” cache mode but about 200 MB/sec with the “none” cache mode.

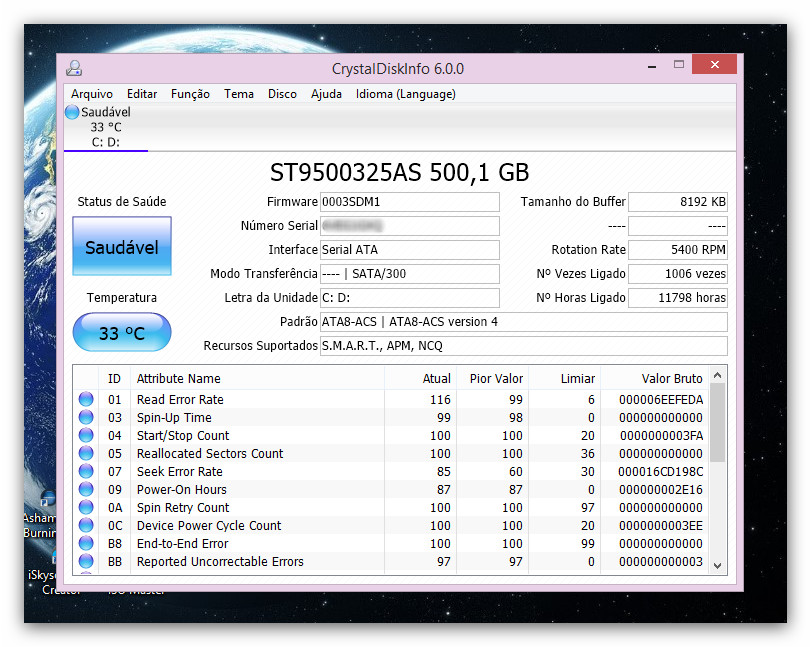
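In qemu the cache mode is chosen per disk with the `cache=` suboption of `-drive`. According to the qemu documentation, `cache=writethrough` goes through the host page cache but completes each guest write only after it has been flushed to the host disk, while `cache=none` opens the image with O_DIRECT and bypasses the host page cache. The minimal sketch below only assembles the two command lines for comparison. The image name `win10.qcow2`, the `qcow2` format, and the 8192 MiB memory size are hypothetical placeholders, not the setup behind the numbers above.

```python
from __future__ import annotations

import shlex

# The two cache modes compared in this post. Per the qemu docs:
#   writethrough: host page cache used, each write flushed to disk before completing
#   none:         image opened with O_DIRECT, host page cache bypassed
CACHE_MODES = ("writethrough", "none")


def qemu_argv(image: str, cache: str, fmt: str = "qcow2", mem_mib: int = 8192) -> list[str]:
    """Build a qemu command line with a single disk using the given cache mode.

    image, fmt and mem_mib are hypothetical placeholders for illustration.
    """
    if cache not in CACHE_MODES:
        raise ValueError(f"unsupported cache mode: {cache!r}")
    drive = f"file={image},format={fmt},cache={cache}"
    return [
        "qemu-system-x86_64",
        "-enable-kvm",
        "-m", str(mem_mib),
        "-drive", drive,
    ]


if __name__ == "__main__":
    for mode in CACHE_MODES:
        # Print a shell-ready command line for each cache mode.
        print(shlex.join(qemu_argv("win10.qcow2", mode)))
```

Only the `cache=` value differs between the two commands, which isolates the effect of the cache mode on guest write speed.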

For example, qemu has a “writethrough” cache mode, which uses the host cache, and a “none” cache mode, which supports direct IO semantics. I found an exchange between Linus Torvalds and Dave Chinner from about 5 months ago in which Linus called BS on Dave’s statement, “That said, the page cache is still far, far slower than direct IO”. Dave is right, and I know that from personal experience.

I also found a mailing-list thread discussing direct IO support for tmpfs, and most people there were actually in favor of it. So I don’t understand why, in the 12 years since the last post in that thread, tmpfs still hasn’t gained direct IO support, except that Linus Torvalds seems to hate(?) it. The only reason I can see for Linux RAM disks being slower is their lack of direct IO support.

On Windows 10, CrystalDiskMark run against a RAM disk created by ImDisk gave about 7 GB/sec for sequential reads (queue depth = 32, threads = 1) and about 10 GB/sec for sequential writes. Benchmarking ramfs and tmpfs with dd gave similar numbers for both: I get about 2.2 GB/sec when writing from two Samsung 960 Pro drives in RAID 0 to ramfs or tmpfs, while I expected speeds above 6 GB/sec.

My use cases include loading disk images from the Samsung 960 Pro NVMe SSDs into a RAM disk. The images are typically 40 GB or more, and the RAM disks in Linux are very slow for this.
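To compare buffered and direct writes without dd (roughly what `dd oflag=direct` does), here is a minimal Linux-only sketch that writes the same amount of data once through the page cache and once with `os.O_DIRECT`, then reports MB/sec. O_DIRECT requires the buffer address, file offset and transfer size to be aligned to the device's logical block size. The sketch assumes that an anonymous `mmap` buffer (page aligned) and 1 MiB writes satisfy that requirement, which holds on typical systems. When a filesystem rejects O_DIRECT, `open` fails with `EINVAL` (as documented in open(2)), and the sketch reports "not supported" instead of crashing. The file name `odirect_test.bin`, the 64 MiB size and the default temp-directory target are hypothetical choices. Point the path at a tmpfs or ramfs mount (for example `/dev/shm`) or at the NVMe drive to test those.

```python
import errno
import mmap
import os
import tempfile
import time
from typing import Optional

CHUNK = 1 << 20  # 1 MiB per write, a multiple of common logical block sizes


def write_throughput(path: str, total_bytes: int, direct: bool = False) -> Optional[float]:
    """Write total_bytes (rounded up to whole chunks) to path sequentially.

    Returns MB/s, or None if O_DIRECT was requested but is unavailable
    on this platform or rejected by the filesystem.
    """
    if direct and not hasattr(os, "O_DIRECT"):
        return None  # e.g. macOS has no O_DIRECT flag
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC
    if direct:
        flags |= os.O_DIRECT  # bypass the page cache
    # Anonymous mmap memory is page aligned, which satisfies O_DIRECT's
    # buffer alignment requirement on typical systems.
    buf = mmap.mmap(-1, CHUNK)
    buf.write(b"\xab" * CHUNK)
    fd = -1
    try:
        fd = os.open(path, flags, 0o644)
        start = time.perf_counter()
        written = 0
        while written < total_bytes:
            written += os.write(fd, buf)
        os.fsync(fd)  # include the flush to storage in the timing
        elapsed = time.perf_counter() - start
    except OSError as e:
        if direct and e.errno == errno.EINVAL:
            return None  # filesystem does not support direct IO
        raise
    finally:
        if fd >= 0:
            os.close(fd)
        buf.close()
    return written / elapsed / 1e6


if __name__ == "__main__":
    # Hypothetical target: defaults to the system temp dir.
    path = os.path.join(tempfile.gettempdir(), "odirect_test.bin")
    try:
        for direct in (False, True):
            mbps = write_throughput(path, 64 * CHUNK, direct)
            label = "O_DIRECT" if direct else "buffered"
            result = "not supported" if mbps is None else f"{mbps:.0f} MB/s"
            print(f"{label}: {result}")
    finally:
        if os.path.exists(path):
            os.remove(path)
```

A single-threaded loop like this measures one sequential stream. It is not comparable to CrystalDiskMark's queue depth 32 numbers, so treat the results as a relative buffered-versus-direct comparison on the same machine.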
